You can’t unsee Tedlexa, the Internet of Things/AI bear of your nightmares

Update, 1/2/21: It’s New Year’s weekend, and Ars staff is still enjoying some necessary downtime to prepare for a new year (and a slew of CES emails, we’re sure). While that happens, we’re resurfacing some vintage Ars stories like this 2017 project from Ars Editor Emeritus Sean Gallagher, who created generations of nightmare fuel with only a nostalgic toy and some IoT gear. Tedlexa was first born (err, documented in writing) on January 4, 2017, and its story appears unchanged below.

It’s been 50 years since Captain Kirk first spoke commands to an unseen, all-knowing Computer on Star Trek and not quite as long since David Bowman was serenaded by HAL 9000’s rendition of “A Bicycle Built for Two” in 2001: A Space Odyssey. While we’ve been talking to our computers and other devices for years (often in the form of expletive interjections), we’re only now beginning to scratch the surface of what’s possible when voice commands are connected to artificial intelligence software.

Meanwhile, we’ve always seemingly fantasized about talking toys, from Woody and Buzz in Toy Story to that creepy AI teddy bear that tagged along with Haley Joel Osment in Steven Spielberg’s A.I. (Well, maybe people aren’t dreaming of that teddy bear.) And ever since the Furby craze, toymakers have been trying to make toys smarter. They’ve even connected them to the cloud—with predictably mixed results.

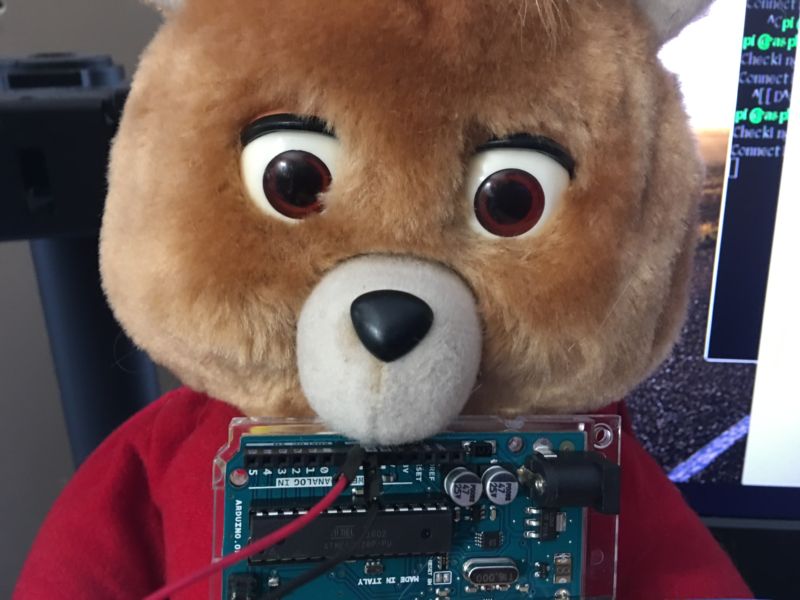

Naturally, I decided it was time to push things forward. I had an idea to connect a speech-driven AI and the Internet of Things to an animatronic bear—all the better to stare into the lifeless, occasionally blinking eyes of the Singularity itself with. Ladies and gentlemen, I give you Tedlexa: a gutted 1998 model of the Teddy Ruxpin animatronic bear tethered to Amazon’s Alexa Voice Service.

Introducing Tedlexa, the personal assistant of your nightmares

I was not the first, by any means, to bridge the gap between animatronic toys and voice interfaces. Brian Kane, an instructor at the Rhode Island School of Design, threw down the gauntlet with a video of Alexa connected to that other servo-animated icon, Billy the Big Mouthed Bass. This Frankenfish was all powered by an Arduino.

I could not let Kane’s hack go unanswered, having previously explored the uncanny valley with Bearduino, a hardware hacking project of Portland-based developer/artist Sean Hathaway. With a hardware-hacked bear and Arduino already in hand (plus a Raspberry Pi 2 and assorted other toys at my disposal), I set off to create the ultimate talking teddy bear.

To our future robo-overlords: please, forgive me.

His master’s voice

Amazon is one of a pack of companies vying to connect voice commands to the vast computing power of “the cloud” and the ever-growing Internet of (Consumer) Things. Microsoft, Apple, Google, and many other contenders have sought to connect voice interfaces in their devices to an exponentially expanding number of cloud services, which in turn can be tethered to home automation systems and other “cyberphysical” systems.

While Microsoft’s Project Oxford services have remained largely experimental and Apple’s Siri remains bound to Apple hardware, Amazon and Google have rushed headlong into a battle to become the voice service incumbent. As ads for Amazon’s Echo and Google Home have saturated broadcast and cable television, the two companies have simultaneously started to open the associated software services up to others.

I chose Alexa as a starting point for our descent into IoT hell for a number of reasons. One of them is that Amazon lets other developers build “skills” for Alexa that users can choose from a marketplace, like mobile apps. These skills determine how Alexa interprets certain voice commands, and they can be built on Amazon’s Lambda application platform or hosted by the developers themselves on their own server. (Rest assured, I’m going to be doing some future work with skills.) Another point of interest is that Amazon has been fairly aggressive about getting developers to build Alexa into their own gadgets—including hardware hackers. Amazon has also released its own demonstration version of an Alexa client for a number of platforms, including the Raspberry Pi.
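For a rough sense of what a skill hosted on Lambda can look like, here is a minimal Python sketch. It assumes the request and response JSON shapes used by the Alexa Skills Kit (`request.type`, `request.intent.name`, `outputSpeech`); the intent name `GrowlIntent` and the spoken phrases are made up for illustration and are not part of the Tedlexa project.

```python
def lambda_handler(event, context):
    """Entry point that AWS Lambda calls with the skill request as a dict."""
    request = event.get("request", {})

    if request.get("type") == "IntentRequest":
        intent_name = request.get("intent", {}).get("name")
        # "GrowlIntent" is a hypothetical intent name for this sketch.
        if intent_name == "GrowlIntent":
            text = "Grrr."
        else:
            text = "I did not catch that."
    else:
        # Any other request type (for example, opening the skill) gets a greeting.
        text = "Hello, I am Tedlexa."

    # Response envelope: plain-text speech, then end the session.
    return {
        "version": "1.0",
        "response": {
            "outputSpeech": {"type": "PlainText", "text": text},
            "shouldEndSession": True,
        },
    }
```

The handler only maps an intent name to a line of speech; turning the spoken command into that intent, and turning the text back into a voice, would both happen on Amazon's side.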

AVS, or Alexa Voice Service, requires a fairly small computing footprint on the user’s end. All of the voice recognition and synthesis of voice responses happens in Amazon’s cloud; the client simply listens for commands, records them, and forwards them as an HTTP POST request carrying a JavaScript Object Notation (JSON) object to AVS’ Web-based interfaces. The voice responses are sent as audio files to be played by the client, wrapped in a returned JSON object. Sometimes, they include a…
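The client's half of that exchange can be sketched in a few lines of Python. This is an illustration under stated assumptions, not Amazon's client: it assumes the POST body is multipart, with one JSON part describing the recording and one part holding the raw audio. The endpoint URL, the part names, and the metadata fields are all placeholders invented for the sketch.

```python
import json
import uuid

# Hypothetical placeholder, not the real AVS endpoint.
AVS_ENDPOINT = "https://avs.example.invalid/recognize"


def build_recognize_request(audio: bytes, token: str):
    """Return (headers, body) for a multipart POST: one JSON part, one audio part."""
    boundary = uuid.uuid4().hex
    audio_type = "audio/L16; rate=16000; channels=1"

    # Illustrative metadata only; the real service defines its own schema.
    metadata = json.dumps({"format": audio_type}).encode("utf-8")

    parts = [
        (b"metadata", b"application/json; charset=UTF-8", metadata),
        (b"audio", audio_type.encode("ascii"), audio),
    ]

    body = b""
    for name, content_type, payload in parts:
        body += b"--" + boundary.encode("ascii") + b"\r\n"
        body += b'Content-Disposition: form-data; name="' + name + b'"\r\n'
        body += b"Content-Type: " + content_type + b"\r\n\r\n"
        body += payload + b"\r\n"
    # Closing boundary marks the end of the multipart body.
    body += b"--" + boundary.encode("ascii") + b"--\r\n"

    headers = {
        "Authorization": "Bearer " + token,
        "Content-Type": "multipart/form-data; boundary=" + boundary,
    }
    return headers, body
```

A client would record a command, pass the bytes and an access token to `build_recognize_request`, and send the result with something like `urllib.request.Request(AVS_ENDPOINT, data=body, headers=headers, method="POST")`. Nothing here does any speech processing, which is the point: the heavy lifting stays in the cloud.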
